I paid for some of those Lensa AI profile images

I already had the Lensa app on my phone, so I paid $9.99 AUD for 100 of their AI-generated “magic avatars” just to see what would happen.

For the source images, I purposefully included pictures without makeup and in bad lighting that truly show my age. And yet every image the app spit out looks like me if I were 20 and a little bit prettier.

It was an unsurprising result from an app that people use to filter away any signs of ageing or skin texture, but I would have preferred results that looked like the 36-year-old me in the different styles! Oh well. It’s also funny to me that even though I included smiling pictures in my source images, almost every generated image ended up with my trademark RBF.

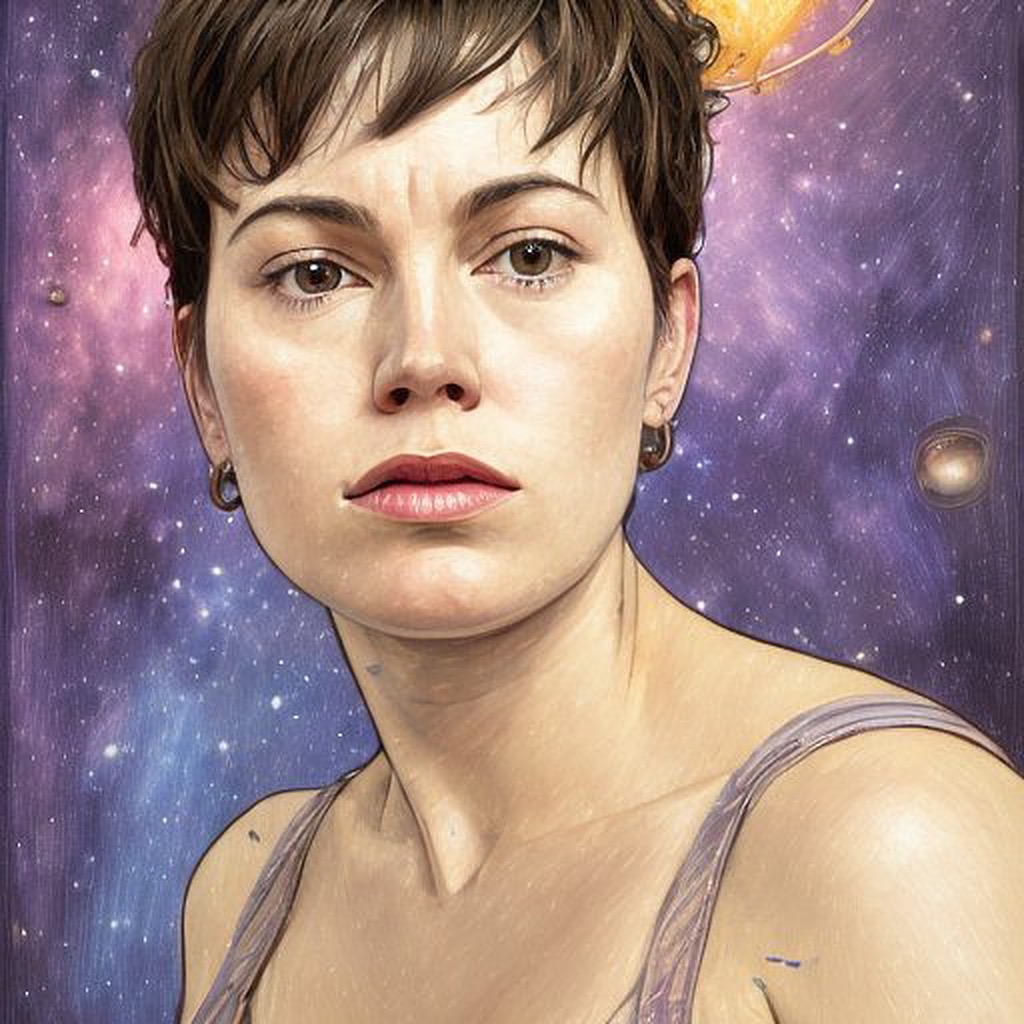

Of all the images, I think this one actually looks the most like me, and I like the starry background, so I will probably swap it in for my profile picture on socials.

This was the best glitch

Update: 14th Dec 2022

Melissa Heikkilä shares the upsetting results of her Lensa AI images in her article for MIT Technology Review.

Of the 100 images I had generated, I noticed almost all of them had me in a tank top (even though I wasn’t wearing one in any of the source images). I thought “the model obviously thinks women should be in tank tops”, just like it thought I should be prettier and younger.

Melissa’s images included actual nudes.

I got images of generic Asian women clearly modeled on anime or video-game characters. Or most likely porn, considering the sizable chunk of my avatars that were nude or showed a lot of skin. A couple of my avatars appeared to be crying. My white female colleague got significantly fewer sexualized images, with only a couple of nudes and hints of cleavage.

This is another example of how AI models trained on biased data perpetuate the same biases and stereotypes in the output they generate.

This leads to AI models that sexualize women regardless of whether they want to be depicted that way —especially women with identities that have been historically disadvantaged. — Aylin Caliskan
